Spark Streaming Programming Guide - Spark 1.1.1 Documentation

Discretized Streams (DStreams)

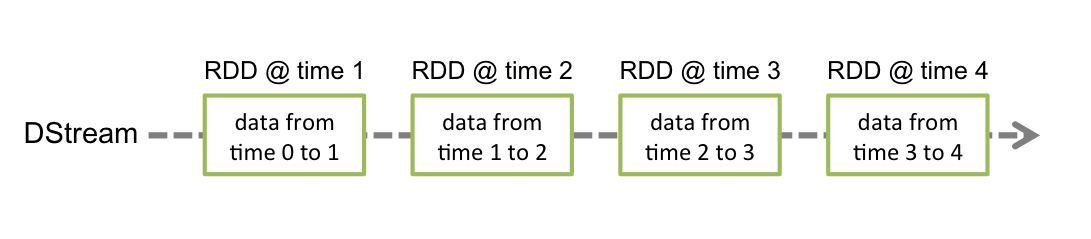

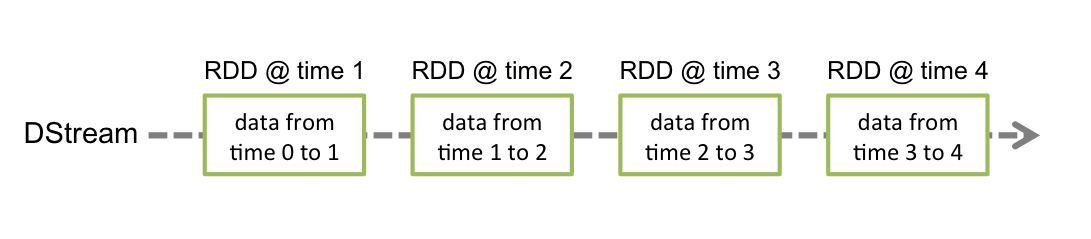

A Discretized Stream, or DStream, is the basic abstraction provided by Spark Streaming. It represents a continuous stream of data: either the input data stream received from a source, or the processed data stream generated by transforming the input stream. Internally, a DStream is represented by a continuous series of RDDs, the RDD being Spark’s abstraction of an immutable, distributed dataset (see the Spark Programming Guide for more details). Each RDD in a DStream contains data from a certain interval, as shown in the figure.
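To make the "one RDD per interval" idea concrete, here is a minimal sketch rather than anything taken from the guide itself. It assumes Spark Streaming 1.1.x on the classpath, a local master, and a one-second batch interval, and it uses queueStream as a convenient test source. The object and method names (BatchPerInterval, countsPerBatch) are hypothetical.

```scala
import scala.collection.mutable

import org.apache.spark.SparkConf
import org.apache.spark.rdd.RDD
import org.apache.spark.streaming.{Seconds, StreamingContext}

object BatchPerInterval {
  // Feeds each element of `batches` to a DStream as one RDD and returns the
  // record count of every RDD that the DStream handed to foreachRDD.
  def countsPerBatch(batches: Seq[Seq[String]]): Seq[Long] = {
    // Assumption: local mode with two threads is enough for this test source.
    val conf = new SparkConf().setMaster("local[2]").setAppName("BatchPerInterval")
    // Batch interval of one second: the DStream produces one RDD per second.
    val ssc = new StreamingContext(conf, Seconds(1))

    // Queue the test data; by default one RDD is dequeued per batch interval.
    val queue = new mutable.Queue[RDD[String]]()
    batches.foreach(batch => queue += ssc.sparkContext.parallelize(batch))

    val counts = mutable.ArrayBuffer[Long]()
    // foreachRDD exposes the RDD generated for each batch interval.
    ssc.queueStream(queue).foreachRDD { rdd =>
      val n = rdd.count()
      counts.synchronized { counts += n }
    }

    ssc.start()
    // Wait long enough for every queued RDD to be processed, then stop.
    Thread.sleep((batches.size + 3) * 1000L)
    ssc.stop() // also stops the underlying SparkContext
    counts.synchronized { counts.toList }
  }
}
```

Calling countsPerBatch(Seq(Seq("a", "b"), Seq("c"))) should report one count per queued RDD. Depending on the Spark version, intervals in which the queue was empty may additionally show up as zero counts.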

File Streams: For reading data from files on any file system compatible with the HDFS API (that is, HDFS, S3, NFS, etc.), a DStream can be created with streamingContext.fileStream[keyClass, valueClass, inputFormatClass](dataDirectory).


Input DStreams

Input DStreams are DStreams representing the stream of raw data received from streaming sources. Spark Streaming has two categories of streaming sources.

- Basic sources: Sources directly available in the StreamingContext API. Example: file systems, socket connections, and Akka actors.

- Advanced sources: Sources like Kafka, Flume, Kinesis, Twitter, etc. are available through extra utility classes. These require linking against extra dependencies as discussed in the linking section.

Every input DStream (except file stream) is associated with a single Receiver object which receives the data from a source and stores it in Spark’s memory for processing. So every input DStream receives a single stream of data. Note that in a streaming application, you can create multiple input DStreams to receive multiple streams of data in parallel.
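The idea of several input DStreams receiving data in parallel can be sketched as follows. This is an illustration under stated assumptions, not the guide's own example: the ports are hypothetical, each is assumed to be served by its own text source, and the helper localMaster is an invented name. It relies on the fact that each receiver occupies one core, so a local master needs more threads than receivers.

```scala
import org.apache.spark.SparkConf
import org.apache.spark.streaming.{Seconds, StreamingContext}

object ParallelReceivers {
  // Each receiver-based input DStream occupies one core, so a local master
  // needs at least one more thread than receivers to leave room for processing.
  def localMaster(numReceivers: Int): String = s"local[${numReceivers + 1}]"

  def main(args: Array[String]): Unit = {
    // Hypothetical ports, each assumed to be served by its own text source.
    val ports = Seq(9999, 9998)
    val conf = new SparkConf()
      .setMaster(localMaster(ports.size))
      .setAppName("ParallelReceivers")
    val ssc = new StreamingContext(conf, Seconds(1))

    // One input DStream, and therefore one Receiver, per port.
    val streams = ports.map(port => ssc.socketTextStream("localhost", port))

    // Merge the parallel streams into a single DStream for downstream processing.
    val lines = ssc.union(streams)
    // Print the number of lines received in each batch across all receivers.
    lines.count().print()

    ssc.start()
    ssc.awaitTermination()
  }
}
```

Unioning the streams keeps the downstream logic identical to the single-stream case while the receiving work is spread over two receivers.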

val lines = ssc.socketTextStream("localhost", 9999)

ssc.socketTextStream(...) creates a DStream from text data received over a TCP socket connection. The other basic sources are used as follows.

- File Streams: streamingContext.fileStream[keyClass, valueClass, inputFormatClass](dataDirectory) creates a DStream from files. Spark Streaming will monitor the directory dataDirectory and process any files created in that directory (files written in nested directories are not supported). Note that

  - The files must have the same data format.

  - The files must be created in the dataDirectory by atomically moving or renaming them into the data directory.

  - Once moved, the files must not be changed. So if the files are being continuously appended, the new data will not be read.

  For simple text files, there is an easier method, streamingContext.textFileStream(dataDirectory). File streams do not require running a receiver, and hence do not require allocating cores.

- Streams based on Custom Actors: DStreams can be created with data streams received through Akka actors by using streamingContext.actorStream(actorProps, actor-name). See the Custom Receiver Guide for more details.

- Queue of RDDs as a Stream: For testing a Spark Streaming application with test data, one can also create a DStream based on a queue of RDDs, using streamingContext.queueStream(queueOfRDDs). Each RDD pushed into the queue will be treated as a batch of data in the DStream, and processed like a stream.
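The file-stream requirement that files arrive by an atomic move or rename can be sketched like this. It is one possible approach, not a prescribed one: it assumes Java 7 or later for java.nio.file, a local file system, and staging and data directories on the same file system. The helper publish, the directory names, and the file name are all hypothetical.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.{Files, Path, StandardCopyOption}

import org.apache.spark.SparkConf
import org.apache.spark.streaming.{Seconds, StreamingContext}

object FileStreamExample {
  // Writes `content` into `stagingDir` first, then moves the finished file into
  // `dataDir` in one atomic step, so the stream never sees a half-written file.
  // Assumption: both directories live on the same file system.
  def publish(stagingDir: Path, dataDir: Path, name: String, content: String): Path = {
    val staged = stagingDir.resolve(name)
    Files.write(staged, content.getBytes(StandardCharsets.UTF_8))
    Files.move(staged, dataDir.resolve(name), StandardCopyOption.ATOMIC_MOVE)
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical directories created under the JVM's temp directory.
    val stagingDir = Files.createTempDirectory("staging")
    val dataDir = Files.createTempDirectory("incoming")

    // File streams run no receiver, so no core is reserved for receiving data.
    val conf = new SparkConf().setMaster("local[2]").setAppName("FileStreamExample")
    val ssc = new StreamingContext(conf, Seconds(1))

    // Monitor dataDir and print the first lines of each newly arrived text file.
    ssc.textFileStream(dataDir.toString).print()
    ssc.start()

    // The file is complete before it becomes visible in the monitored directory.
    publish(stagingDir, dataDir, "batch-0001.txt", "hello\nworld\n")
    ssc.awaitTermination()
  }
}
```

Writing to a staging directory first is a design choice that satisfies both rules at once: the file appears in the monitored directory in a single step, and it is never modified afterwards.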

Read full article from Spark Streaming Programming Guide - Spark 1.1.1 Documentation
